Point spread functions

The point spread function of an imaging system is simply the impulse response of the system. In terms of digital cameras, this can be understood as the photo of an infinitely thin beam of light head-on, somewhat like taking a picture of a star. The camera pixels have a fixed, finite size, but the hypothetical beam of light is infinitely thin, so how does the camera sensor represent such an input? The short (but approximate) answer is that the average light over the physical area of the pixel is recorded by the sensor. Unfortunately, this approximation does not produce a well-sampled image, and would lead to severe aliasing. The solution is to apply some low-pass filtering to the incoming light to remove (or at least strongly attenuate) the spatial frequencies above the Nyquist limit of the sensor. In plain English: you have to blur the light before it reaches the sensor if you want to minimise sampling artifacts.A suitable filter for this task must spread the light of our hypothetical infinitely thin beam over several adjacent pixels. This is fairly simple to prove, but requires considerable background knowledge of sampling theory and frequency domain representations of images, so I will have to treat this as a given fact in this article.

Such a blurring filter is found in most DSLR cameras, and is often called the Anti-Aliasing (AA) filter, the Low-Pass filter, or the Optical Low-Pass Filter (OLPF). Back to our example: if we capture an image of our thin beam with a sensor that has an AA filter, we will see something like this (magnified for visualisation here):

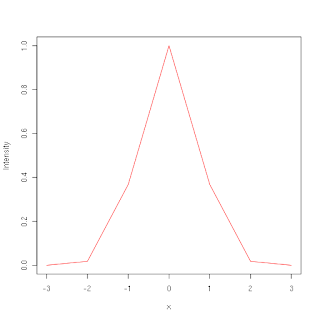

This image is a direct visualization of the actual PSF. An intensity cross-section of this image will look like this:

Lo and behold, it looks like a Gaussian distribution! This is, of course, only an approximation, but in my experience Nikon OLPFs are quite close to a Gaussian, so this is not a terrible assumption for DSLRs.

The x axis of the cross-section above is marked in units of pixels, with the origin being treated as the centre of the pixel to which this PSF belongs. In other words, if we are generating a synthetic image with a given PSF, we should place that PSF at the centre of each pixel that we wish to render. The sample PSF above is substantially more blurry than what you would find in a real camera, but even here we can see that the bulk of the distribution is only 4 pixels wide in terms of the desired target image's pixel size.

Direct Gaussian PSF simulation by blurring

Let us assume that we are interested in generating synthetic images with a Gaussian PSF. We want to render black rectangular targets on white backgrounds to obtain images that look like this:The most naive method for rendering a synthetic image would be to take our target image (generated by drawing it in a paint program, for example) and blur it with the desired Gaussian PSF --- this is easily done with something like GIMP or ImageMagic. While this method may be suitable for a very quick-and-dirty implementation, it is far from ideal because is only samples the PSF at integer multiples of the pixel spacing, producing the following discretized PSF:

Clearly, this is a very crude approximation of the smooth PSF above, and this approximation is even worse for narrower Gaussians --- imagine if the width of the Gaussian is only 1 pixel in stead of 4 as shown here.

Direct Gaussian PSF simulation with oversampling

The first refinement would be to start with a higher resolution image. You could start by drawing your target at 4 times the final resolution. The Gaussian blur is then applied (after adjusting its scale) to the higher resolution image, followed by downsampling. In this case, downsampling would mean taking only every 4th pixel from the high resolution image after the blur has been applied. At 4x oversampling, the PSF approximation would look like this:This is already much better. If we keep on increasing the oversampling factor, we should eventually obtain a good enough approximation, but our oversampled image quickly becomes unmanageable large (e.g., 128x oversampling would consume 16384 as much memory as our final image).

Inverse Gaussian PSF rendering basics

A better way of rendering the image is to reverse our thinking. First, imagine that we are not applying the blur to the high resolution image, followed by downsampling, but instead we position our PSF at the centre of a given pixel in our final image. This PSF is then mapped back onto the high-resolution image, where the PSF is used to compute a weighted sum of intensity valued taken from the high resolution image. Effectively, we choose our target pixel from the final image, and go back to the high resolution image to perform weighted sampling. In this form we are not gaining anything yet, but it should be understood that this formulation is functionally equivalent to the direct approach.The next step is to abandon the manual generation of the high resolution image altogether. For simple targets, such as rectangles, we can easily provide an analytical description: if we know the position of the four corners of the rectangle, then we can easily test whether a point is inside the rectangle or not. Using this analytical description, we can now render the final image without keeping the entire high resolution image. We proceed to centre the PSF at each pixel in the final image. This PSF is then mapped back to the coordinate system in which the analytical description of the target is defined.

Now we have a few options. The first approach would be discrete sampling: for each pixel in the final image, project a grid of closely spaced (something like 16x oversampling or more) points around the pixel centre onto the analytical target. Test each point to see if it is inside the target (rectangle) or not, and evaluate the PSF at the same location to accumulate the weighted sum over this fine grid. This works, but Gaussian PSFs with large standard deviations (wide Gaussians) would require larger sampling grids, e.g., 7x7 pixels or more at 16x oversampling, so this strategy becomes inefficient.

Enter importance sampling

A much better strategy is to have a non-uniform set of points in stead of a grid of evenly spaced sampling points. The best distribution of these points would be one that matched the density of the PSF itself. For a Gaussian, we can use something like the Box-Muller transform or Moro's inversion to transform numbers in the range [0,1] to match the desired Gaussian distribution. Using quasi-random samples in stead of uniform or randomly spaced samples, we can get equally good results with fewer samples.Thus, we follow the same steps as with plain inverse sampling: project the PSF centred at each pixel in the final image back onto the coordinate system in which the analytical target (rectangle) is defined. For each sampling point, test to see whether it is inside the target, or not. Note that because our sampling points already follow the PSF's density distribution, we should not weight our samples with the PSF again. Just count the number of samples inside the rectangle, and divide by the number of samples in total to obtain the correct intensity for each pixel.

This method works reasonably well, and has the advantage that it automatically adapts the sampling density according to the width of the PSF, while keeping the computational cost per pixel constant. I have had reasonably good results using 2025 samples per final pixel. But can we do even better with comparable computational cost?

Direct integration of the PSF over the target

The importance sampling method is really just Monte Carlo integration of the PSF over the region defined by the target rectangle. If you are happy with this statement, then you are ready for the next (and final) method: Directly computing the integral of the PSF over the rectangle.It helps to visualise this step as follows: Imagine the PSF centered at a given pixel as a red dot superimposed on the target rectangle.

Suppose that the PSF is quite wide, with a standard deviation of around 6 pixels, i.e., a width of about 30 pixels. If we represent the rectangular region as a slightly raised platform above the background, and the PSF superimposed on top of that, we obtain something like this:

or from a different angle,

The final intensity of the pixel (centered at the peak of the PSF surface) should be proportional to the are under the curve. Note how the integral of the PSF surface is limited to the region defined by the rectangle.

Given that our PSF is a Gaussian, this boils down to evaluating the double integral of the PSF over a the rectangular region which serves as our target object. There is no closed-form solution to this integral. In one dimension, the integral of a Gaussian is often called the error function or erf(x). There is still no closed form solution, but good polynomial approximations exist.

If we thus restrict ourselves to radially symmetric Gaussian PSFs, we can decompose the computation of the double integral into two parts. Imagine a thin horizontal slice of our rectangle, illustrated with a green line here:

We only want to integrate inside the rectangle, so we can compute the intersection of the horizontal line with our rectangle to find the integration limits. The cross section of the PSF along this line is simply a Gaussian centered at our target pixel. The height of this horizontal Gaussian is determined by the vertical cross section of the PSF at our pixel centre.

We can directly compute the integral of the horizontal cross-section using the error function, and we simply scale the result according to vertical Gaussian cross section of our PSF (using the distance from our horizontal line to the PSF centre as argument).

If we proceed to slice our rectangle into such horizontal lines, it becomes clear that we are evaluating the numerical integral in the y direction. I settled on the adaptive version of Simpson's rule, mostly because it is straightforward to implement. This method is comparable to the importance sampling method above using 2025 samples per pixel in terms of run time, but produces significantly more accurate images.

Some care should be take to ensure that the adaptive integration does not "skip over" parts of the PSF, but the details can be found by reading the mtf_generate_rectangle source code.

This last method is quite accurate, yielding synthetic images that have PSFs that are extremely close to the expected analytical PSFs --- see part 2 of the accuracy validation experiment.

Variations and improvements

This method can easily be adapted to convex polygon targets, or even integration over elliptical targets. If more than one target must be rendered in a single image, then you just accumulate the PSF integral over each of the target objects. This will clearly become rather slow if you have many target objects, but spatial partitioning such as used in ray tracing should help to speed up rendering.I have assumed a stationary PSF throughout, but it is straightforward to modify the method to vary the standard deviation of the Gaussian according to the pixel's position in the image. The numerical integration method can also easily accommodate Gaussian PSFs with differing horizontal and vertical standard deviations.

The more general case of a astigmatic Gaussian PSF, i.e., where the PSF contours are ellipsoidal, and the orientation of the PSF varies across the image, can be accommodated by rotating the targets around the centre of the PSF until the ellipsoidal contours are aligned with the x and y directions.

This was really useful. You're right, this info is not easy to find in most textbooks. Thanks.

ReplyDelete